Aug 22, 2024

Post-quantum Cryptography in 2024

Explore post-quantum cryptography’s rise in 2024 and how the new standards help prepare us for future quantum attacks on our data.

Imagine it’s 2040, and the first quantum computer capable of real cryptographic attacks comes online. Governments, businesses, and other organizations may hold data from the past that is protected by pre-quantum (classical) encryption, and some of that data may still be valuable to someone in 2040. If an attacker collected that classically protected data earlier, they could now run quantum cryptographic attacks against it and potentially recover the secrets.

We call this scenario the “harvest now, decrypt later” attack. An attacker collects encrypted data today, for example through access gained in another attack. Even if the data’s confidentiality can’t be broken with the classical computers of 2024, it may be breakable with quantum computers in the future.
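One way to reason about this risk is Michele Mosca’s often-cited inequality: if x (the years data must stay confidential) plus y (the years a migration takes) is greater than z (the years until a cryptographically relevant quantum computer, or CRQC, exists), the data is already at risk. The minimal Python sketch below encodes that check and a simpler harvest-window check. The 2040 arrival year is only the hypothetical from the scenario above, not a prediction, and the function names are made up for illustration.

```python
def mosca_at_risk(shelf_life: float, migration_time: float, time_to_crqc: float) -> bool:
    """Mosca's inequality, all values in years: worry if x + y > z."""
    return shelf_life + migration_time > time_to_crqc


def harvest_exposed(encrypted_year: int, shelf_life: int, crqc_year: int) -> bool:
    """True if data protected only by classical crypto is still valuable when a CRQC arrives."""
    return encrypted_year + shelf_life > crqc_year


# Using the hypothetical 2040 arrival year (an assumption, not a forecast).
print(harvest_exposed(2024, shelf_life=20, crqc_year=2040))  # True: still valuable until 2044
print(harvest_exposed(2024, shelf_life=10, crqc_year=2040))  # False: worthless after 2034
print(mosca_at_risk(shelf_life=10, migration_time=8, time_to_crqc=16))  # True: 18 > 16
```

The second check is the “harvest now, decrypt later” window in its simplest form; Mosca’s version adds the migration time, because data encrypted during a slow migration is still classically protected.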

Today’s quantum computers can run only small computations, but they are improving. Integer factorization is one task that a sufficiently large quantum computer can perform far more efficiently than a classical computer, using Shor’s algorithm. As quantum computers grow, so does the size of the factorization problems they can take on, which puts some classical cryptographic algorithms at risk of being broken or weakened.
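To show where a quantum computer fits in, here is the classical skeleton of Shor’s algorithm as a small Python sketch. Factoring n reduces to finding the period r of a^x mod n. A quantum computer’s job is only that period-finding step, which the hypothetical `find_period` helper below does by brute force, so this version is exponentially slow and only works for tiny n.

```python
from math import gcd


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This brute-force loop is the step Shor's algorithm replaces with quantum
    period finding; classically it takes time exponential in the bit length of n.
    """
    r, value = 1, a % n
    while value != 1:
        value = value * a % n
        r += 1
    return r


def shor_reduction(n: int, a: int):
    """Try to split n using base a; returns a factor pair, or None if a is unlucky."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None               # odd period: retry with another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # trivial square root of 1: retry with another a
    return gcd(half - 1, n), gcd(half + 1, n)


print(shor_reduction(15, 7))  # (3, 5)
print(shor_reduction(21, 2))  # (7, 3)
```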

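RSA’s security rests on the difficulty of factoring large integers, while elliptic curve (EC) algorithms rest on the related elliptic curve discrete logarithm problem. Shor’s algorithm solves both efficiently on a large enough quantum computer. In modern systems, RSA is widely used for identity (signatures and certificates), while EC is used both for identity (for example, ECDSA) and for Diffie-Hellman key exchange (ECDH).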
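A toy RSA example in Python makes the dependency on factoring concrete: once an attacker factors the public modulus n, the private exponent follows from a single modular inverse. The primes are the tiny textbook values 61 and 53, and trial division stands in for Shor’s algorithm. Real RSA moduli are 2048 bits or more, and real RSA also uses padding, which this sketch omits. `pow(e, -1, m)` needs Python 3.8 or later.

```python
def make_toy_rsa(p: int, q: int, e: int):
    """Textbook RSA with tiny primes: returns (public_key, private_exponent)."""
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)            # modular inverse of e mod phi
    return (p * q, e), d


def factor(n: int):
    """Trial division: fine for toy n, hopeless for a 2048-bit n on classical hardware."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factors")


def recover_private_exponent(public_key):
    """An attacker who can factor n (for example, with Shor's algorithm) rebuilds d."""
    n, e = public_key
    p, q = factor(n)
    return pow(e, -1, (p - 1) * (q - 1))


public_key, d = make_toy_rsa(61, 53, 17)
n, e = public_key
ciphertext = pow(65, e, n)                       # "encrypt" the number 65
stolen_d = recover_private_exponent(public_key)
print(stolen_d == d, pow(ciphertext, stolen_d, n))  # True 65
```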

On August 2nd, 2016, NIST announced its request for submissions of public-key algorithms designed to resist known quantum attacks. On August 13th, 2024, NIST published the first set of post-quantum cryptography (PQC) standards.

These standards are FIPS 203, 204, and 205. Together with FIPS 206, which is coming soon, these standards and their algorithms can help prepare us for future attacks from quantum computers. Because of the “harvest now, decrypt later” attack vector, there is a sense of urgency to better protect sensitive, long-lived secrets.

National Institute of Standards and Technology (2024) Module-Lattice-Based Key Encapsulation Mechanism Standard. (Department of Commerce, Washington, D.C.), Federal Information Processing Standards Publication (FIPS) NIST FIPS 203. https://doi.org/10.6028/NIST.FIPS.203

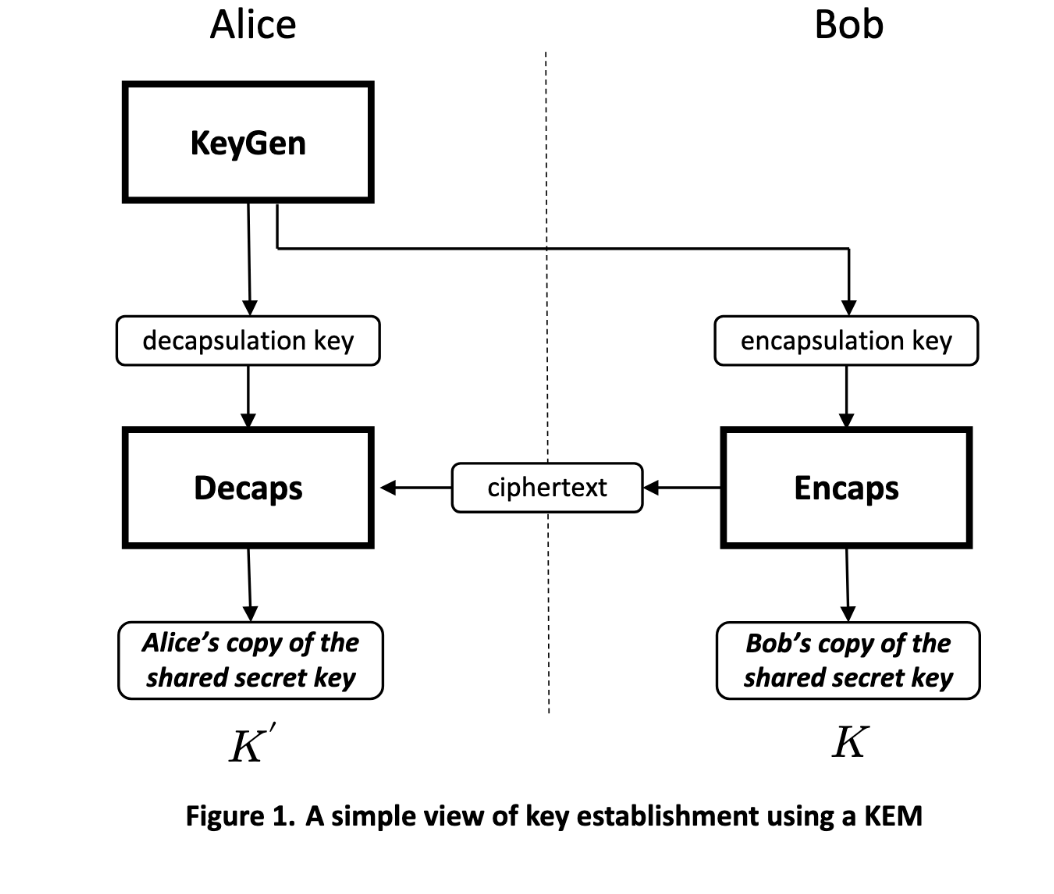

The module-lattice-based key-encapsulation mechanism (ML-KEM), derived from CRYSTALS-Kyber, is a technique we can use to protect ephemeral TLS secrets from “harvest now, decrypt later” attacks. We can apply it today in a hybrid cryptographic system using a TLSv1.3 extension. ML-KEM’s security is based on the Module Learning With Errors (MLWE) problem, a structured variant of the “Learning With Errors” (LWE) problem over lattices. A simple way to think about it: the public key is a set of noisy linear equations built from a secret, and recovering that secret from the noisy equations is believed to be hard, even for quantum computers. A sender uses the recipient’s public key to encapsulate a fresh shared secret, producing a ciphertext; the recipient decapsulates that ciphertext with its private key to recover the same shared secret. The shared secret then provides the key for a symmetric cipher like AES-256, which encrypts the actual data.
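The noisy-equations idea and the encapsulate/decapsulate flow can be seen in a toy version of Regev’s LWE encryption wrapped as a KEM, sketched below in Python. This is not ML-KEM: ML-KEM uses module lattices over polynomial rings, small secrets, ciphertext compression, and a Fujisaki-Okamoto-style transform for chosen-ciphertext security. Only the modulus 3329 is borrowed from ML-KEM; the other parameters are far too small to be secure, and all function names are illustrative.

```python
import hashlib
import secrets

Q = 3329   # modulus borrowed from ML-KEM; everything else here is toy-sized
N = 16     # length of the secret vector (toy)
M = 32     # number of noisy equations in the public key (toy)


def small_error() -> int:
    return secrets.choice((-1, 0, 1))


def keygen():
    s = [secrets.randbelow(Q) for _ in range(N)]                      # private key
    A = [[secrets.randbelow(Q) for _ in range(N)] for _ in range(M)]
    b = [(sum(a * si for a, si in zip(row, s)) + small_error()) % Q for row in A]
    return (A, b), s          # public key: noisy equations b = A*s + e (mod Q)


def encrypt_bit(public_key, bit: int):
    A, b = public_key
    r = [secrets.randbelow(2) for _ in range(M)]                     # random subset of rows
    u = [sum(ri * A[i][j] for i, ri in enumerate(r)) % Q for j in range(N)]
    v = (sum(ri * bi for ri, bi in zip(r, b)) + bit * (Q // 2)) % Q
    return u, v


def decrypt_bit(s, ciphertext) -> int:
    u, v = ciphertext
    # v - u*s = (sum of at most M small errors) + bit * Q//2, so round to 0 or Q/2.
    noisy = (v - sum(uj * sj for uj, sj in zip(u, s))) % Q
    return 1 if Q // 4 < noisy < 3 * Q // 4 else 0


def encapsulate(public_key, key_bits: int = 32):
    """Pick random bits, encrypt each one, and hash them into a shared secret."""
    bits = [secrets.randbelow(2) for _ in range(key_bits)]
    ciphertext = [encrypt_bit(public_key, bit) for bit in bits]
    return ciphertext, hashlib.sha3_256(bytes(bits)).digest()


def decapsulate(s, ciphertext) -> bytes:
    bits = [decrypt_bit(s, ct) for ct in ciphertext]
    return hashlib.sha3_256(bytes(bits)).digest()


public_key, private_key = keygen()
ciphertext, sender_secret = encapsulate(public_key)
print(decapsulate(private_key, ciphertext) == sender_secret)  # True
```

With these parameters the accumulated error is at most 32, well below Q/4, so decryption never fails; real schemes choose parameters so that failures are astronomically rare rather than impossible.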

National Institute of Standards and Technology (2024) Module-Lattice-Based Digital Signature Standard. (Department of Commerce, Washington, D.C.), Federal Information Processing Standards Publication (FIPS) NIST FIPS 204. https://doi.org/10.6028/NIST.FIPS.204

The module-lattice-based digital signature algorithm (ML-DSA), derived from CRYSTALS-Dilithium, is an approach to digital signatures. We can use it to make signatures that will hopefully withstand both classical and quantum cryptographic attacks. PQC TLS (HTTPS) certificates may use ML-DSA for cryptographic identity. ML-DSA’s module-lattice construction is based on the “Fiat-Shamir with Aborts” approach.

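The Fiat-Shamir transform turns an interactive identification protocol into a non-interactive proof by replacing the verifier’s random challenge with a hash of the signer’s commitment and the message. “With Aborts” means the signer checks each candidate signature and, if it falls outside a safe bound where it could leak information about the private key, discards it and retries with fresh randomness. This rejection step protects the private key, and because the proof is non-interactive, the signer and verifier need no extra round trips over the network.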
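The loop below is a toy Python sketch of Fiat-Shamir with Aborts, loosely in the style of Lyubashevsky’s lattice signatures. It is not ML-DSA: it uses small integer vectors instead of polynomial rings, a one-byte challenge, and no public-key compression or hints, and only the modulus 8380417 is borrowed from ML-DSA. It shows the two ideas described above: a hash replaces the verifier’s challenge, and the signer discards any response z that falls outside a bound where it could leak the secret s.

```python
import hashlib
import secrets

Q = 8380417          # ML-DSA's modulus; every other parameter below is a toy value
K, L = 4, 4          # public matrix A is K x L
ETA = 2              # secret coefficients lie in [-ETA, ETA]
GAMMA = 1 << 12      # masking coefficients lie in [-GAMMA, GAMMA]
C_MAX = 255          # challenge is one hash byte (far too small to be secure)
BOUND = GAMMA - C_MAX * ETA  # accepted |z_i| must not exceed this


def mat_vec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) % Q for row in A]


def challenge(w, message: bytes) -> int:
    # Fiat-Shamir: a hash of the commitment and message replaces the verifier's challenge.
    return hashlib.shake_256(repr(w).encode() + message).digest(1)[0]


def keygen():
    A = [[secrets.randbelow(Q) for _ in range(L)] for _ in range(K)]
    s = [secrets.randbelow(2 * ETA + 1) - ETA for _ in range(L)]
    return (A, mat_vec(A, s)), s      # public key (A, t = A*s mod Q), secret s


def sign(public_key, s, message: bytes):
    A, _ = public_key
    attempts = 0
    while True:
        attempts += 1
        y = [secrets.randbelow(2 * GAMMA + 1) - GAMMA for _ in range(L)]
        w = mat_vec(A, y)                        # commitment
        c = challenge(w, message)
        z = [yi + c * si for yi, si in zip(y, s)]
        # Abort: a z near the edge of the range could reveal s, so start over.
        if all(abs(zi) <= BOUND for zi in z):
            return (c, z), attempts


def verify(public_key, message: bytes, signature) -> bool:
    A, t = public_key
    c, z = signature
    if any(abs(zi) > BOUND for zi in z):
        return False
    # A*z - c*t = A*(y + c*s) - c*A*s = A*y = w (mod Q)
    w = [(az - c * ti) % Q for az, ti in zip(mat_vec(A, z), t)]
    return challenge(w, message) == c


pk, sk = keygen()
sig, attempts = sign(pk, sk, b"hello")
print(verify(pk, b"hello", sig), attempts)  # True, plus however many tries the signer needed
```

With these toy bounds roughly four in ten signing attempts abort, which makes the retry loop visible; accepted values of z are uniform in [-BOUND, BOUND] regardless of s.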

National Institute of Standards and Technology (2024) Stateless Hash-Based Digital Signature Standard. (Department of Commerce, Washington, D.C.), Federal Information Processing Standards Publication (FIPS) NIST FIPS 205. https://doi.org/10.6028/NIST.FIPS.205

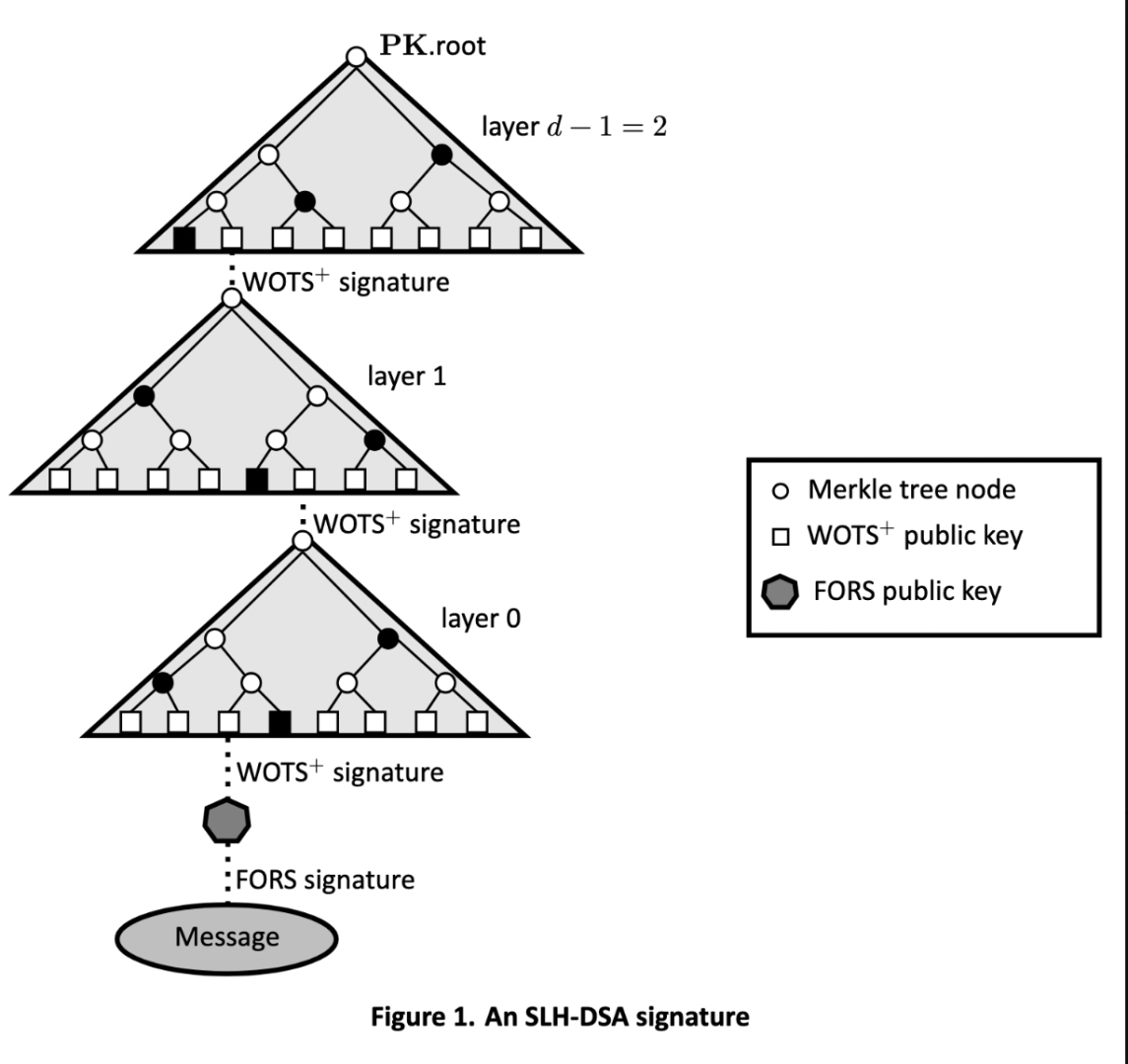

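The stateless hash-based digital signature algorithm (SLH-DSA), derived from SPHINCS+, is another approach to digital signatures. It does not use lattices; its security rests only on the properties of hash functions, combined across several techniques. SLH-DSA builds a hypertree: layers of Merkle trees (hash trees) in which each tree’s root is signed by a one-time signature key (WOTS+) at a leaf of a tree in the layer above. At the bottom, a leaf signs the public key of a FORS (Forest Of Random Subsets) few-time signature, and the FORS key signs the message digest. The signature combines the FORS signature with the chain of one-time signatures and Merkle authentication paths that link it to the single public root. Because the leaf is selected pseudorandomly from the message and FORS tolerates a few reuses, the signer never needs to track which keys it has used, which is what makes the scheme stateless.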
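Merkle authentication paths are the glue of SLH-DSA’s hypertree. This minimal Python sketch uses plain SHA-256 and placeholder byte strings as leaves; real SLH-DSA uses tweakable hash functions keyed by tree addresses, with WOTS+ public keys as leaves. It shows how a signer proves that one leaf belongs to a tree by sending only the sibling hashes along the path, and how a verifier recomputes the root.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """All tree levels, leaves first; the last level holds only the root."""
    if len(leaves) == 0 or len(leaves) & (len(leaves) - 1):
        raise ValueError("leaf count must be a power of two")
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def auth_path(levels, index: int):
    """Sibling hashes from leaf to root: what a signature carries."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])
        index //= 2
    return path


def root_from_path(leaf: bytes, index: int, path) -> bytes:
    """What a verifier recomputes; it must match the trusted root."""
    node = h(leaf)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node


one_time_keys = [f"one-time public key {i}".encode() for i in range(8)]  # placeholders
levels = build_levels(one_time_keys)
root = levels[-1][0]
path = auth_path(levels, 5)
print(root_from_path(one_time_keys[5], 5, path) == root)  # True
print(root_from_path(b"forged key", 5, path) == root)     # False
```

For 8 leaves the path holds only 3 hashes; this logarithmic size is why hypertrees with an enormous number of leaves still produce manageable signatures.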

Not all classical ciphers appear broken in a quantum world, but many do, and the resulting security holes have wide theoretical impact.

As far as the math theory has led us, some of the cryptographic components we use today are likely to hold up. Many classical primitives remain in use alongside and inside the PQC algorithms. These include AES-256 symmetric encryption and the SHAKE extendable-output functions (XOFs). SHAKE is part of the SHA-3 family of functions; see FIPS 202 for more about SHAKE. The best-known quantum attack on symmetric keys, Grover’s algorithm, gives only a quadratic speedup, so AES-256 is expected to retain roughly 128 bits of security. TLS can use AES-256, but the foremost issue on the TLS side is the EC used in the ephemeral key exchange, which is how the shared secret is derived. The session keys for AES-256 encryption of the payloads are then derived from that shared secret. So just because we use AES-256 doesn’t mean we are guaranteed protection: if the key exchange is broken, the AES keys are exposed too.
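The Python sketch below illustrates two points from this paragraph. The hypothetical `expand_seed` helper uses SHAKE128 to stretch a short seed into as many values modulo 3329 as needed; ML-KEM uses an XOF in a similar way to derive its public matrix from a 32-byte seed, but the exact sampling rules in FIPS 203 differ from this simplified version. The `grover_security_bits` helper encodes the idealized Grover estimate (a quadratic speedup for brute-force key search) and ignores the large real-world overheads of actually running Grover’s algorithm.

```python
import hashlib

Q = 3329  # ML-KEM's modulus


def expand_seed(seed: bytes, count: int) -> list[int]:
    """Derive `count` values in [0, Q) from a seed with SHAKE128 (simplified sampler)."""
    if count <= 0:
        return []
    stream_len = 4 * count
    while True:
        stream = hashlib.shake_128(seed).digest(stream_len)
        values = []
        for i in range(0, stream_len, 2):
            candidate = int.from_bytes(stream[i:i + 2], "little") & 0x0FFF  # 12 bits
            if candidate < Q:  # rejection sampling keeps the result uniform mod Q
                values.append(candidate)
                if len(values) == count:
                    return values
        stream_len *= 2  # rare: too few accepted values, squeeze a longer stream


def grover_security_bits(key_bits: int) -> int:
    """Idealized Grover estimate: brute force drops from 2**k to about 2**(k/2) steps."""
    return key_bits // 2


print(expand_seed(b"public seed", 4) == expand_seed(b"public seed", 4))  # True: deterministic
# An XOF's longer output extends its shorter output from the same input.
print(hashlib.shake_256(b"x").digest(64)[:32] == hashlib.shake_256(b"x").digest(32))  # True
print(grover_security_bits(256), grover_security_bits(128))  # 128 64
```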

It will take the world many years to get to a better posture against the “harvest now, decrypt later” attack vector.

One of the first things we should do is get an inventory of all of our cryptographic components. We then want to measure and document each of those components, without leaking or exposing them. The measurements should include the expiration or age of the material, as well as the bit strength and algorithms. Ideally, we also know which customers or business partners rely on that material.

From there, we can prioritize the list of cryptographic components based on the greatest need for PQC.
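As a starting point, an inventory record and a priority score could look like the minimal Python sketch below. The field names, the `QUANTUM_VULNERABLE` labels, and the scoring weights are all hypothetical choices for illustration, not a standard. The point is that each record stores a fingerprint of public material (or a key identifier) rather than the secret itself, and that algorithm, exposure, expiry, and dependents all feed the ranking.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date

# Hypothetical labels for algorithms whose hard problems Shor's algorithm solves.
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH", "EdDSA", "DH"}


@dataclass
class CryptoAsset:
    name: str
    algorithm: str
    key_bits: int
    expires: date
    fingerprint: str                      # hash of public material or a key id, never the secret
    dependents: list[str] = field(default_factory=list)
    internet_facing: bool = False


def fingerprint(public_material: bytes) -> str:
    return hashlib.sha256(public_material).hexdigest()


def pqc_priority(asset: CryptoAsset, today: date) -> float:
    """Higher means migrate sooner. Weights are illustrative, not a standard."""
    score = 0.0
    if asset.algorithm in QUANTUM_VULNERABLE:
        score += 10
    if asset.internet_facing:
        score += 5
    years_left = max((asset.expires - today).days / 365.25, 0)
    score += min(years_left, 20)          # long-lived material matters more
    score += len(asset.dependents)        # more customers or partners, more impact
    return score


today = date(2024, 8, 22)
inventory = [
    CryptoAsset("web TLS cert", "ECDSA", 256, date(2025, 8, 1),
                fingerprint(b"web cert public key"), ["customers"], internet_facing=True),
    CryptoAsset("code-signing root CA", "RSA", 4096, date(2044, 1, 1),
                fingerprint(b"root CA public key"), ["partner A", "partner B"]),
    CryptoAsset("backup encryption key", "AES", 256, date(2026, 1, 1),
                fingerprint(b"backup key id")),
]
for asset in sorted(inventory, key=lambda a: pqc_priority(a, today), reverse=True):
    print(f"{pqc_priority(asset, today):5.1f}  {asset.name}")
```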

Internet-facing TLS is one area likely to move up the priority list. This is because a hybrid PQC mechanism is already working and available, and the ciphertext traverses the internet, leaving the confines of our control. Even traffic that never leaves our network passes through routers, firewalls, proxies, and multiple physical media, so its exposure is still significant. Medical, financial, and human resources systems may be a priority because of the sensitive PII that moves between devices in those systems. The current solution is hybrid PQC, which layers classical and post-quantum algorithms. For TLS, this is accomplished with a TLSv1.3 extension.

TLSv1.3 was designed to be modular, so its extension mechanism can be used to add new key exchange methods. In 2024, we use the KEM alongside the EC key exchange to protect the shared secret, and leave the rest of the handshake classical. Several hybrid TLSv1.3 approaches are being adopted and shipped by IBM, AWS, Cloudflare, and others; some follow FIPS 203 closely, while others have diverged from it (for example, deployments built on pre-standard Kyber drafts). The hybrid combines multiple key exchange techniques, so that if EC is broken, the ML-KEM (Kyber) encapsulation still protects the shared secret.
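Conceptually, the hybrid step combines two shared secrets so that an attacker must break both. The Python sketch below runs a real X25519 exchange (a plain, non-constant-time implementation of the RFC 7748 ladder, for education only), then feeds its result, together with a 32-byte placeholder standing in for the ML-KEM-768 shared secret, into HKDF-Extract. Real TLS hybrid groups follow the IETF hybrid design drafts, which concatenate the component secrets and use the result in place of the (EC)DHE input to the TLS 1.3 key schedule. The ordering, salt, and `combine` helper here are simplifications, and no ML-KEM implementation is included.

```python
import hashlib
import hmac
import secrets

P = 2**255 - 19
A24 = 121665
BASE_POINT = (9).to_bytes(32, "little")


def _clamp(k: bytes) -> int:
    b = bytearray(k)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return int.from_bytes(b, "little")


def x25519(k: bytes, u: bytes) -> bytes:
    """RFC 7748 Montgomery ladder. Educational only: not constant-time."""
    scalar = _clamp(k)
    x1 = (int.from_bytes(u, "little") & ((1 << 255) - 1)) % P
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        bit = (scalar >> t) & 1
        swap ^= bit
        if swap:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869) with SHA-256."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def combine(ecdh_secret: bytes, mlkem_secret: bytes) -> bytes:
    # Concatenate-then-KDF: an attacker must recover BOTH inputs to learn the output.
    return hkdf_extract(b"\x00" * 32, ecdh_secret + mlkem_secret)


client_priv, server_priv = secrets.token_bytes(32), secrets.token_bytes(32)
client_pub = x25519(client_priv, BASE_POINT)
server_pub = x25519(server_priv, BASE_POINT)
client_ecdh = x25519(client_priv, server_pub)
server_ecdh = x25519(server_priv, client_pub)

# Placeholder: in a real hybrid handshake this 32-byte value comes from ML-KEM-768
# (server side: encapsulation; client side: decapsulation). Not implemented here.
mlkem_secret = secrets.token_bytes(32)

print(combine(client_ecdh, mlkem_secret) == combine(server_ecdh, mlkem_secret))  # True
```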

After hybrid TLS, another likely high priority is long-lived identities that are expected to last 10 years or longer. These extra-long-lived identities might be PKI components like certificate authority signing certificates, such as those for code signing or other uses where a root key might never change. For these long-lived identities, the technical debt is far more varied than in the TLS situation. We might use ML-DSA certificates for these long-lived identities. More software will need to be developed and adopted, in some cases specific to the tools and automation used within an organization, to close this PQC gap.

Some aspects of PQC migration will likely take nearly a decade, and not everyone is going to do it. While the United States government is adopting PQC, not all departments need to take action today. Many complex systems that are central to security should ideally be replaced before a quantum era arrives. This will likely be an extremely expensive journey for the whole world, but if we plan well, those costs can be reduced.

Cryptographic agility, or “crypto agility”, is the property of a system that lets it use modular cryptographic components and change them effectively. Many current systems lack this property.

We have gone through many major cryptographic migrations since the standards for HTTPS (SSL, then TLS) were adopted, and it took many organizations eight years to migrate away from TLSv1.0 and SHA-1. The PQC migration will really be many migrations in different areas, with multiple phases of adoption and blended support for classical algorithms.

To save money and reduce risk, we adopt the design pattern of “crypto agility”. We can apply it to our software systems by selecting libraries and frameworks that let us add and modify modular components for cryptographic operations, without performing an entire system migration or rewriting all of our software at once to adopt new cryptography.

We don’t want to accidentally weaken our security by making the cryptography so flexible that an attacker can manipulate it. Rather, we want to compile our programs against modular libraries so that we control which capabilities and algorithms are available. CI/CD and supply chain attacks still need to be considered, of course. Selecting and validating our supply chains for cryptographic components will also be an important task as we plan for PQC adoption.
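One way to get agility without handing the choice to an attacker is a fixed registry plus an allowlist from trusted configuration. This minimal Python sketch does that for message authentication codes. The algorithm id is stored with each tag so old data can be found and migrated, and verification refuses any algorithm the current policy has not allowed. `MacPolicy`, the algorithm names, and the migration scenario are hypothetical examples; nothing here implies that HMAC-SHA-256 needs retiring.

```python
import hashlib
import hmac
from types import MappingProxyType

# Hypothetical set of MAC algorithms compiled into this program. Adding one is a
# reviewed code change; nothing at runtime can register a new entry.
_MACS = MappingProxyType({
    "hmac-sha256": hashlib.sha256,
    "hmac-sha3-256": hashlib.sha3_256,
})


class MacPolicy:
    """Chooses algorithms from a fixed allowlist set by trusted configuration."""

    def __init__(self, key: bytes, allowed, preferred: str):
        unknown = [name for name in allowed if name not in _MACS]
        if unknown:
            raise ValueError(f"not built into this program: {unknown}")
        if preferred not in allowed:
            raise ValueError("preferred algorithm must be in the allowlist")
        self._key = key
        self.allowed = frozenset(allowed)
        self.preferred = preferred

    def tag(self, data: bytes) -> str:
        # Self-describing output: the algorithm id travels with the tag,
        # so stored tags can be found and re-issued during a migration.
        mac = hmac.new(self._key, data, _MACS[self.preferred]).hexdigest()
        return f"{self.preferred}:{mac}"

    def verify(self, data: bytes, tag: str) -> bool:
        name, _, received = tag.partition(":")
        # The algorithm named in untrusted input is honored only if policy allows it,
        # so an attacker cannot downgrade to something we have retired.
        if name not in self.allowed:
            return False
        expected = hmac.new(self._key, data, _MACS[name]).hexdigest()
        return hmac.compare_digest(expected.encode(), received.encode())


key = b"example key, load from a secrets manager in practice"
transition = MacPolicy(key, {"hmac-sha256", "hmac-sha3-256"}, "hmac-sha3-256")
legacy_tag = MacPolicy(key, {"hmac-sha256"}, "hmac-sha256").tag(b"record")
print(transition.verify(b"record", legacy_tag))  # True: old tags still accepted
final = MacPolicy(key, {"hmac-sha3-256"}, "hmac-sha3-256")
print(final.verify(b"record", legacy_tag))  # False: retired algorithm rejected
print(final.verify(b"record", transition.tag(b"record")))  # True
```

The flexibility lives in trusted configuration (which entries are allowed and preferred), while the set of possible algorithms is fixed when the program is built, matching the idea of controlling what is available at compile time.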

Integer factorization, like the discrete logarithm problem behind EC, is no longer considered hard enough to provide cryptographic security against quantum computer attacks. This means many existing (classical) cryptographic mechanisms are at risk. RSA and EC are both at risk, while others may be partially weakened. Most strong classical symmetric encryption and hash functions are believed to resist known quantum attacks, although we can’t know for sure. Similarly, we don’t know for sure whether the PQC algorithms will hold up, but they are the best we have today.

Key encapsulation in hybrid cryptosystems is perhaps the most mature PQC available for production use today, in terms of software adoption. End-to-end PQC systems (full adoption across all components) may take many more years to become a reality. The costs and risks are high, so now is a great time to plan and design intelligently so that we can make these changes more easily as we go forward.
